The umbrella time period “observability” covers all method of topics, from fundamental telemetry to logging, to creating claims about longer-term efficiency within the form of service degree aims (SLOs) and sometimes service degree agreements (SLAs). Right here I’d like to debate some philosophical approaches to defining SLOs, clarify how they assist with prioritization, and description the tooling at present accessible to Betterment Engineers to make this course of somewhat simpler.

At a excessive degree, a service degree goal is a approach of measuring the efficiency of, correctness of, validity of, or efficacy of some element of a service over time by evaluating the performance of particular service degree indicators (metrics of some sort) in opposition to a goal aim. For instance,

99.9% of requests full with a 2xx, 3xx or 4xx HTTP code inside 2000ms over a 30 day interval

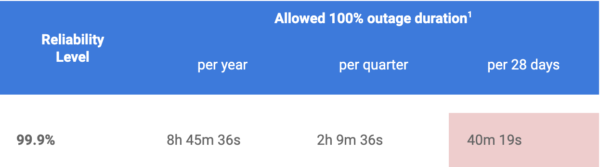

The service degree indicator (SLI) on this instance is a request finishing with a standing code of 2xx, 3xx or 4xx and with a response time of at most 2000ms. The SLO is the goal proportion, 99.9%. We attain our SLO aim if, throughout a 30 day interval, 99.9% of all requests accomplished with a kind of standing codes and inside that vary of latency. If our service didn’t succeed at that aim, the violation overflow — referred to as an “error price range” — exhibits us by how a lot we fell brief. With a aim of 99.9%, we have now 40 minutes and 19 seconds of downtime accessible to us each 28 days. Try extra error price range math right here.

If we fail to satisfy our targets, it’s worthwhile to step again and perceive why. Was the error price range consumed by actual failures? Did we discover plenty of false positives? Perhaps we have to reevaluate the metrics we’re gathering, or maybe we’re okay with setting a decrease goal aim as a result of there are different targets that can be extra necessary to our clients.

That is the place the philosophy of defining and retaining observe of SLOs comes into play. It begins with our customers – Betterment customers – and attempting to offer them with a sure high quality of service. Any error price range we set ought to account for our fiduciary tasks, and will assure that we don’t trigger an irresponsible affect to our clients. We additionally assume that there’s a baseline diploma of software program high quality baked-in, so error budgets ought to assist us prioritize optimistic affect alternatives that transcend these baselines.

Typically there are a couple of layers of indirection between a service and a Betterment buyer, and it takes a little bit of creativity to know what facets of the service immediately impacts them. For instance, an engineer on a backend or data-engineering group supplies companies {that a} user-facing element consumes not directly. Or maybe the customers for a service are Betterment engineers, and it’s actually unclear how that work impacts the individuals who use our firm’s merchandise. It isn’t that a lot of a stretch to assert that an engineer’s degree of happiness does have some impact on the extent of service they’re able to offering a Betterment buyer!

Let’s say we’ve outlined some SLOs and see they’re falling behind over time. We’d check out the metrics we’re utilizing (the SLIs), the failures that chipped away at our goal aim, and, if needed, re-evaluate the relevancy of what we’re measuring. Do error charges for this explicit endpoint immediately replicate an expertise of a person in a roundabout way – be it a buyer, a customer-facing API, or a Betterment engineer? Have we violated our error price range each month for the previous three months? Has there been a rise in Buyer Service requests to resolve issues associated to this particular side of our service? Maybe it’s time to dedicate a dash or two to understanding what’s inflicting degradation of service. Or maybe we discover that what we’re measuring is changing into more and more irrelevant to a buyer expertise, and we will do away with the SLO solely!

Advantages of measuring the proper issues, and staying on course

The aim of an SLO based mostly strategy to engineering is to offer information factors with which to have an inexpensive dialog about priorities (a degree that Alex Hidalgo drives house in his ebook Implementing Service Stage Targets). Within the case of companies not performing properly over time, the dialog is likely to be “give attention to enhancing reliability for service XYZ.” However what occurs if our customers are tremendous pleased, our SLOs are exceptionally well-defined and well-achieved, and we’re forward of our roadmap? Can we attempt to get that further 9 in our goal – or will we use the time to take some inventive dangers with the product (feature-flagged, in fact)? Typically it’s not in our greatest curiosity to be too targeted on efficiency, and we will as an alternative “dissipate our error price range” by rolling out some new A/B check, or upgrading a library we’ve been laying aside for some time, or testing out a brand new language in a user-facing element that we would not in any other case have had the prospect to discover.

Let’s dive into some tooling that the SRE group at Betterment has constructed to assist Betterment engineers simply begin to measure issues.

Gathering the SLIs and Creating the SLOs

The SRE group has a web-app and CLI referred to as `coach` that we use to handle steady integration (CI) and steady supply (CD), amongst different issues. We’ve talked about Coach prior to now right here and right here. At a excessive degree, the Coach CLI generates lots of yaml recordsdata which might be utilized in all kinds of locations to assist handle operational complexity and cloud assets for consumer-facing web-apps. Within the case of service degree indicators (mainly metrics assortment), the Coach CLI supplies instructions that generate yaml recordsdata to be saved in GitHub alongside utility code. At deploy time, the Coach web-app consumes these recordsdata and idempotently create Datadog displays, which can be utilized as SLIs (service degree indicators) to tell SLOs, or as standalone alerts that want quick triage each time they’re triggered.

Along with Coach explicitly offering a config-driven interface for displays, we’ve additionally written a pair useful runtime particular strategies that lead to automated instrumentation for Rails or Java endpoints. I’ll focus on these extra beneath.

We additionally handle a separate repository for SLO definitions. We left this exterior of utility code in order that groups can modify SLO goal targets and particulars with out having to redeploy the applying itself. It additionally made visibility simpler by way of sharing and speaking completely different group’s SLO definitions throughout the org.

Displays in code

Engineers can select both StatsD or Micrometer to measure difficult experiences with customized metrics, and there’s varied approaches to turning these metrics immediately into displays inside Datadog. We use Coach CLI pushed yaml recordsdata to assist metric or APM monitor varieties immediately within the code base. These are saved in a file named .coach/datadog_monitors.yml and appear like this:

displays:

- sort: metric

metric: "coach.ci_notification_sent.accomplished.95percentile"

title: "coach.ci_notification_sent.accomplished.95percentile SLO"

mixture: max

proprietor: sre

alert_time_aggr: on_average

alert_period: last_5m

alert_comparison: above

alert_threshold: 5500

- sort: apm

title: "Pull Requests API endpoint violating SLO"

resource_name: api::v1::pullrequestscontroller_show

max_response_time: 900ms

service_name: coach

web page: false

slack: false

It wasn’t easy to make this abstraction intuitive between a Datadog monitor configuration and a person interface. However this sort of express, attribute-heavy strategy helped us get this tooling off the bottom whereas we developed (and proceed to develop) in-code annotation approaches. The APM monitor sort was easy sufficient to show into each a Java annotation and a tiny area particular language (DSL) for Rails controllers, giving us good symmetry throughout our platforms. . This `proprietor` methodology for Rails apps ends in all logs, error experiences, and metrics being tagged with the group’s title, and at deploy time it’s aggregated by a Coach CLI command and was latency displays with affordable defaults for non-obligatory parameters; primarily doing the identical factor as our config-driven strategy however from throughout the code itself

class DeploysController < ApplicationController proprietor "sre", max_response_time: "10000ms", solely: [:index], slack: false finish

For Java apps we have now the same interface (with affordable defaults as properly) in a tidy little annotation.

@Sla

@Retention(RetentionPolicy.RUNTIME)

@Goal(ElementType.METHOD)

public @interface Sla {

@AliasFor(annotation = Sla.class)

lengthy quantity() default 25_000;

@AliasFor(annotation = Sla.class)

ChronoUnit unit() default ChronoUnit.MILLIS;

@AliasFor(annotation = Sla.class)

String service() default "custody-web";

@AliasFor(annotation = Sla.class)

String slackChannelName() default "java-team-alerts";

@AliasFor(annotation = Sla.class)

boolean shouldPage() default false;

@AliasFor(annotation = Sla.class)

String proprietor() default "java-team";

}

Then utilization is simply so simple as including the annotation to the controller:

@WebController("/api/stuff/v1/service_we_care_about")

public class ServiceWeCareAboutController {

@PostMapping("/search")

@CustodySla(quantity = 500)

public SearchResponse search(@RequestBody @Legitimate SearchRequest request) {...}

}

At deploy time, these annotations are scanned and transformed into displays together with the config-driven definitions, similar to our Ruby implementation.

SLOs in code

Now that we have now our metrics flowing, our engineers can outline SLOs. If an engineer has a monitor tied to metrics or APM, then they only have to plug within the monitor ID immediately into our SLO yaml interface.

- last_updated_date: "2021-02-18"

approval_date: "2021-03-02"

next_revisit_date: "2021-03-15"

class: latency

sort: monitor

description: This SLO covers latency for our CI notifications system - whether or not it is the github context updates in your PRs or the slack notifications you obtain.

tags:

- group:sre

thresholds:

- goal: 99.5

timeframe: 30d

warning_target: 99.99

monitor_ids:

- 30842606

The interface helps metrics immediately as properly (mirroring Datadog’s SLO varieties) so an engineer can reference any metric immediately of their SLO definition, as seen right here:

# availability

- last_updated_date: "2021-02-16"

approval_date: "2021-03-02"

next_revisit_date: "2021-03-15"

class: availability

tags:

- group:sre

thresholds:

- goal: 99.9

timeframe: 30d

warning_target: 99.99

sort: metric

description: 99.9% of guide deploys will full efficiently over a 30day interval.

question:

# (total_events - bad_events) over total_events == good_events/total_events

numerator: sum:hint.rack.request.hits{service:coach,env:manufacturing,resource_name:deployscontroller_create}.as_count()-sum:hint.rack.request.errors{service:coach,env:manufacturing,resource_name:deployscontroller_create}.as_count()

denominator: sum:hint.rack.request.hits{service:coach,resource_name:deployscontroller_create}.as_count()

We love having these SLOs outlined in GitHub as a result of we will observe who’s altering them, how they’re altering, and get overview from friends. It’s not fairly the interactive expertise of the Datadog UI, nevertheless it’s pretty simple to fiddle within the UI after which extract the ensuing configuration and add it to our config file.

Notifications

After we merge our SLO templates into this repository, Coach will handle creating SLO assets in Datadog and accompanying SLO alerts (that ping slack channels of our alternative) if and when our SLOs violate their goal targets. That is the marginally nicer a part of SLOs versus easy displays – we aren’t going to be pinged for each latency failure or error price spike. We’ll solely be notified if, over 7 days or 30 days and even longer, they exceed the goal aim we’ve outlined for our service. We will additionally set a “warning threshold” if we need to be notified earlier once we’re utilizing up our error price range.

Fewer alerts means the alerts ought to be one thing to pay attention to, and probably take motion on. It is a nice approach to get a superb sign whereas lowering pointless noise. If, for instance, our person analysis says we must always intention for 99.5% uptime, that’s 3h 21m 36s of downtime accessible per 28 days. That’s lots of time we will fairly not react to failures. If we aren’t alerting on these 3 hours of errors, and as an alternative simply as soon as if we exceed that restrict, then we will direct our consideration towards new product options, platform enhancements, or studying and improvement.

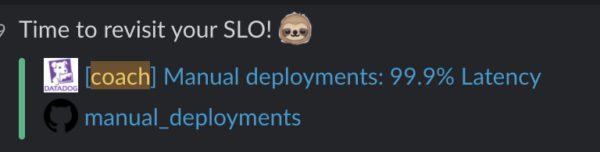

The final a part of defining our SLOs is together with a date once we plan to revisit that SLO specification. Coach will ship us a message when that date rolls round to encourage us to take a deeper take a look at our measurements and probably reevaluate our targets round measuring this a part of our service.

What if SLOs don’t make sense but?

It’s undoubtedly the case {that a} group may not be on the degree of operational maturity the place defining product or user-specific service degree aims is within the playing cards. Perhaps their on-call is admittedly busy, possibly there are lots of guide interventions wanted to maintain their companies operating, possibly they’re nonetheless placing out fires and constructing out their group’s techniques. Regardless of the case could also be, this shouldn’t deter them from gathering information. They’ll outline what is named an “aspirational” SLO – mainly an SLO for an necessary element of their system – to begin gathering information over time. They don’t have to outline an error price range coverage, they usually don’t have to take motion once they fail their aspirational SLO. Simply control it.

Another choice is to begin monitoring the extent of operational complexity for his or her techniques. Maybe they will set targets round “Bug Tracker Inbox Zero” or “Failed Background Jobs Zero” inside a sure time-frame, per week or a month for instance. Or they will outline some SLOs round varieties of on-call duties that their group tackles every week. These aren’t essentially true-to-form SLOs however engineers can use this framework and tooling offered to gather information round how their techniques are working and have conversations on prioritization based mostly on what they uncover, starting to construct a tradition of observability and accountability

Betterment is at a degree in its progress the place prioritization has turn into tougher and extra necessary. Our techniques are typically steady, and have improvement is paramount to enterprise success. However so is reliability and efficiency. Correct reliability is the best operational requirement for any service2. If the service doesn’t work as meant, no person (or engineer) can be pleased. That is the place SLOs are available in. SLOs ought to align with enterprise aims and wishes, which can assist Product and Engineering Managers perceive the direct enterprise affect of engineering efforts. SLOs will make sure that we have now a stable understanding of the state of our companies by way of reliability, they usually empower us to give attention to person happiness. If our SLOs don’t align immediately with enterprise aims and wishes, they need to align not directly through monitoring operational complexity and maturity.

So, how will we select the place to spend our time? SLOs (service degree aims) – together with managing their error budgets – will allow us – our product engineering groups – to have the proper conversations and make the proper selections about prioritization and resourcing in order that we will steadiness our efforts spent on reliability and new product options, serving to to make sure the long run happiness and confidence of our customers (and engineers).

2Alex Hidalgo, Implementing Service Stage Targets

This text is a part of Engineering at Betterment.

These articles are maintained by Betterment Holdings Inc. and they aren’t related to Betterment, LLC or MTG, LLC. The content material on this text is for informational and academic functions solely. © 2017–2021 Betterment Holdings Inc.

Source link