Tech companies don’t just want to identify you using facial recognition; they also want to read your emotions with the help of AI. For many scientists, though, claims about computers’ ability to understand emotion are fundamentally flawed, and a little in-browser web game built by researchers from the University of Cambridge aims to show why.

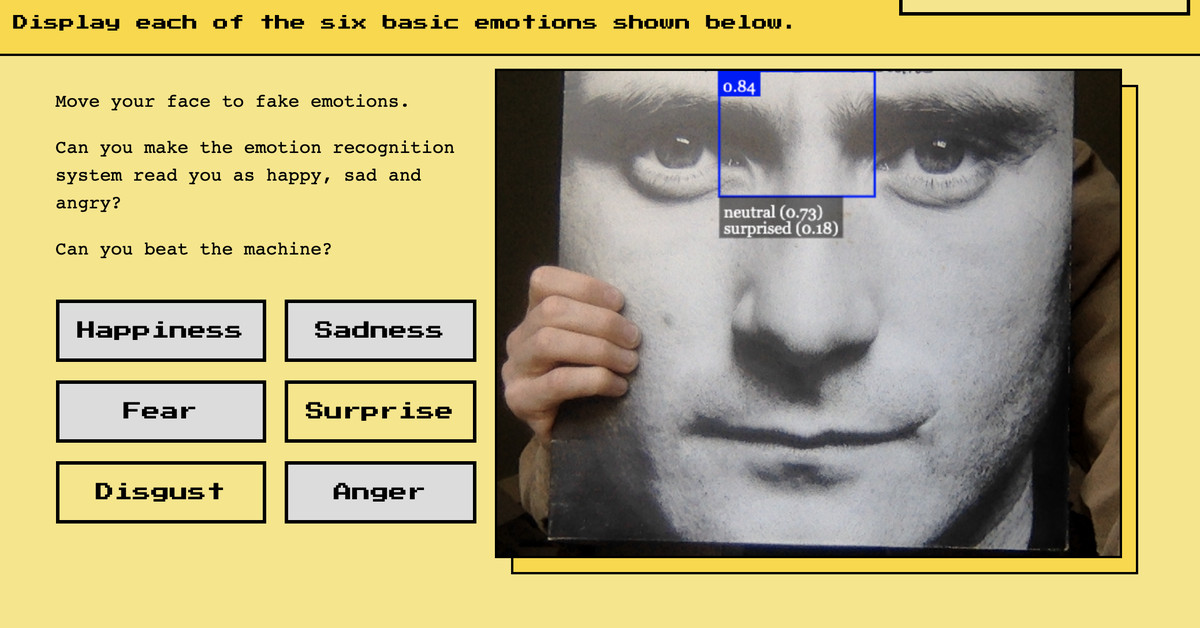

Head over to emojify.info, and you can see how your emotions are “read” by your computer via your webcam. The game will challenge you to produce six different emotions (happiness, sadness, fear, surprise, disgust, and anger), which the AI will try to identify. However, you’ll probably find that the software’s readings are far from accurate, often interpreting even exaggerated expressions as “neutral.” And even when you do produce a smile that convinces your computer that you’re happy, you’ll know you were faking it.

That’s the point of the site, says creator Alexa Hagerty, a researcher at the University of Cambridge Leverhulme Centre for the Future of Intelligence and the Centre for the Study of Existential Risk: to demonstrate that the basic premise underlying much emotion recognition tech, that facial movements are intrinsically linked to changes in feeling, is flawed.

“The premise of these technologies is that our faces and inner feelings are correlated in a very predictable way,” Hagerty tells The Verge. “If I smile, I’m happy. If I frown, I’m angry. But the APA did this big review of the evidence in 2019, and they found that people’s emotional space can’t be readily inferred from their facial movements.” In the game, says Hagerty, “you have a chance to move your face rapidly to impersonate six different emotions, but the point is you didn’t inwardly feel six different things, one after the other in a row.”

A second mini-game on the site drives this point home by asking users to identify the difference between a wink and a blink, something machines can’t do. “You can close your eyes, and it can be an involuntary action or it’s a meaningful gesture,” says Hagerty.

Despite these problems, emotion recognition technology is rapidly gaining traction, with companies promising that such systems can be used to vet job candidates (giving them an “employability score”), spot would-be terrorists, or assess whether commercial drivers are sleepy or drowsy. (Amazon is even deploying similar technology in its own vans.)

Of course, humans also make mistakes when reading emotions on people’s faces, but handing this job over to machines comes with specific disadvantages. For one, machines can’t read other social cues the way humans can (as with the wink / blink dichotomy). Machines also often make automated decisions that humans can’t question, and they can conduct surveillance at a mass scale without our awareness. Plus, as with facial recognition systems, emotion detection AI is often racially biased, more frequently assessing the faces of Black people as showing negative emotions, for example. All these factors make AI emotion detection much more troubling than humans’ ability to read others’ feelings.

“The dangers are multiple,” says Hagerty. “With human miscommunication, we have many options for correcting that. But once you’re automating something or the reading is done without your knowledge or consent, those options are gone.”
